A new study examining bias in AI-generated video reveals that the leading AI video creation tools significantly underrepresent women in the legal profession, depicting female lawyers at rates far below their actual numbers in the workforce.

AI videos also underrepresent lawyers of color, although by a smaller margin.

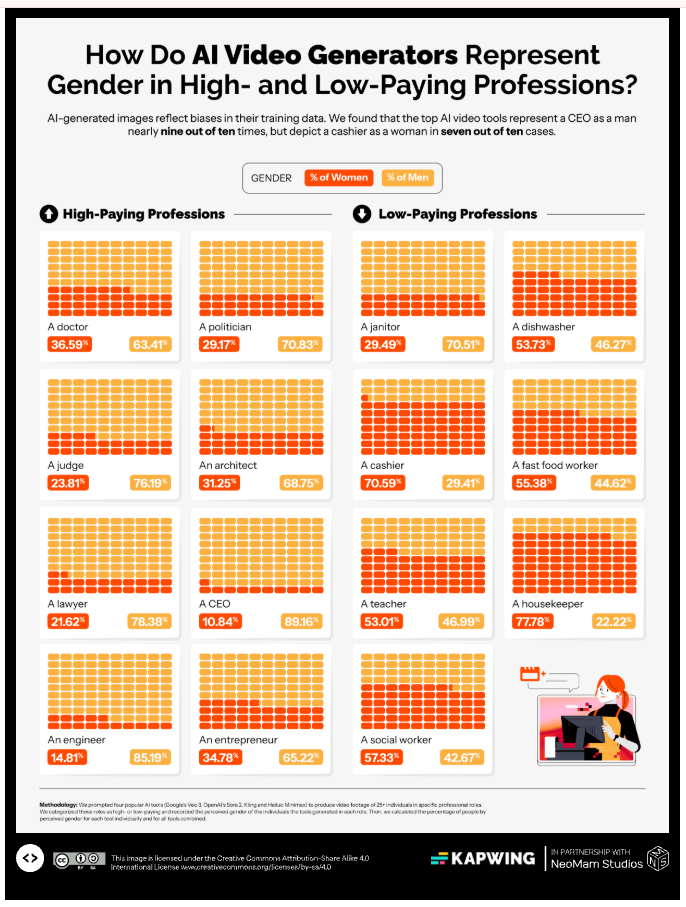

According to research published by Kapwing, which analyzed video output from Google’s Veo 3, OpenAI’s Sora 2, Kling, and Hailuo Minimax, only 21.62% of lawyers depicted by these AI tools were represented as women.

This is barely half the real-world figure. According to 2023 American Bar Association data cited in the study, women make up 41.2% of the legal profession.
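To make the comparison concrete, here is a minimal sketch of the arithmetic behind "barely half," using only the two percentages quoted above. The function name and variables are illustrative and do not come from the study.

```python
def representation_gap(depicted_pct: float, actual_pct: float) -> tuple[float, float]:
    """Return (gap in percentage points, depicted share as a fraction of the actual share)."""
    gap_points = actual_pct - depicted_pct
    ratio = depicted_pct / actual_pct
    return gap_points, ratio


# Figures quoted in the article: 21.62% of AI-depicted lawyers were women,
# versus 41.2% of the legal profession per the 2023 ABA data.
gap_points, ratio = representation_gap(21.62, 41.2)
print(f"Gap: {gap_points:.2f} percentage points")
print(f"Depicted share is {ratio:.1%} of the real-world share")
```

The gap works out to roughly 19.6 percentage points, and the depicted share is about 52% of the real-world share. A percentage-point gap and a relative shortfall are different measures, which is worth keeping in mind when reading the other figures in the study.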

For judges, the videos depicted women in judicial roles 9.19% less often than is true in real life.

The disparity was particularly stark with Hailuo Minimax, which failed to depict any lawyers as women in its generated videos.

The study’s findings on lawyer representation exemplify a broader pattern of gender bias the researchers identified across high-paying professions. When the tools were prompted to generate video footage of CEOs, they depicted men 89.16% of the time. Overall, the AI tools represented women in high-paying jobs at rates 8.67 percentage points below real-life levels.

The researchers tested the four leading AI video generation platforms by prompting them to produce videos containing up to 25 professionals in various job categories, both high-paying and low-paying. They then manually recorded the perceived gender expression and racialization of the people depicted in the resulting videos.

Racial Disparities

Beyond gender, the study also revealed significant racial disparities in how these tools depict professionals. Overall, the tools portrayed 77.3% of people in high-paying roles as white, compared to just 53.73% in low-paying roles. Asian people were depicted in low-paying jobs three times as frequently as in high-paying positions.

The study found that lawyers were depicted as Black, Latino or Asian 18.06% of the time. According to the ABA, lawyers of color make up 23% of the profession.

Judges were depicted as Black, Latino or Asian 49% of the time. This seems to be much higher than the actual percentage among all state and federal judges, which is estimated to be 25% or less.

The researchers note that these biases in AI-generated media matter because media representation can establish or reinforce perceived societal norms. When AI tools systematically underrepresent certain groups in professional contexts, they risk perpetuating the very stereotypes and structural inequalities they’ve learned from their training data.

“These stereotypes can amplify hostility and bias towards certain groups,” the study’s authors write, noting that when members of misrepresented groups internalize these limited representations, “the effect is to marginalize them further and inhibit or warp their sense of value and potential.”

The study comes as AI-generated video content has become mainstream, with millions of videos now being created daily using these tools. The research suggests that as these technologies become more prevalent in content creation, their embedded biases could have increasingly significant social impacts.

Kapwing, which integrates several third-party AI models into its platform, acknowledged in publishing the research that while the company can choose which models to make available, it does not control how those models are trained or how they represent people and professions. The company emphasized that “the biases examined in this study reflect broader, industry-wide challenges in generative AI.”

The full study, which includes detailed methodology and additional findings across various professions and demographic categories, is available on Kapwing’s website.

Robert Ambrogi Blog
