Well, it’s happened again: Generative AI has passed a critical test used to measure candidates’ fitness to be licensed as lawyers.

Back in March, OpenAI’s GPT-4 took the bar exam and passed with flying colors, scoring in roughly the top 10% of test takers.

Now, two of the leading large language models (LLMs) have passed a simulation of the Multistate Professional Responsibility Examination (MPRE), a test required in all but two U.S. jurisdictions to measure prospective lawyers’ knowledge of professional conduct rules.

This time, the test was conducted by researchers at LegalOn Technologies, led by Gabor Melli, LegalOn’s VP of artificial intelligence. They concluded that two of the leading generative AI models are capable of passing the legal ethics exam.

“This research advances our understanding of how AI can assist lawyers and helps us assess its current strengths and limitations,” stated Daniel Lewis, U.S. CEO of LegalOn. “We are not suggesting that AI knows right from wrong or that its behavior is guided by moral principles, but these findings do indicate that AI has potential to support ethical decision-making.”

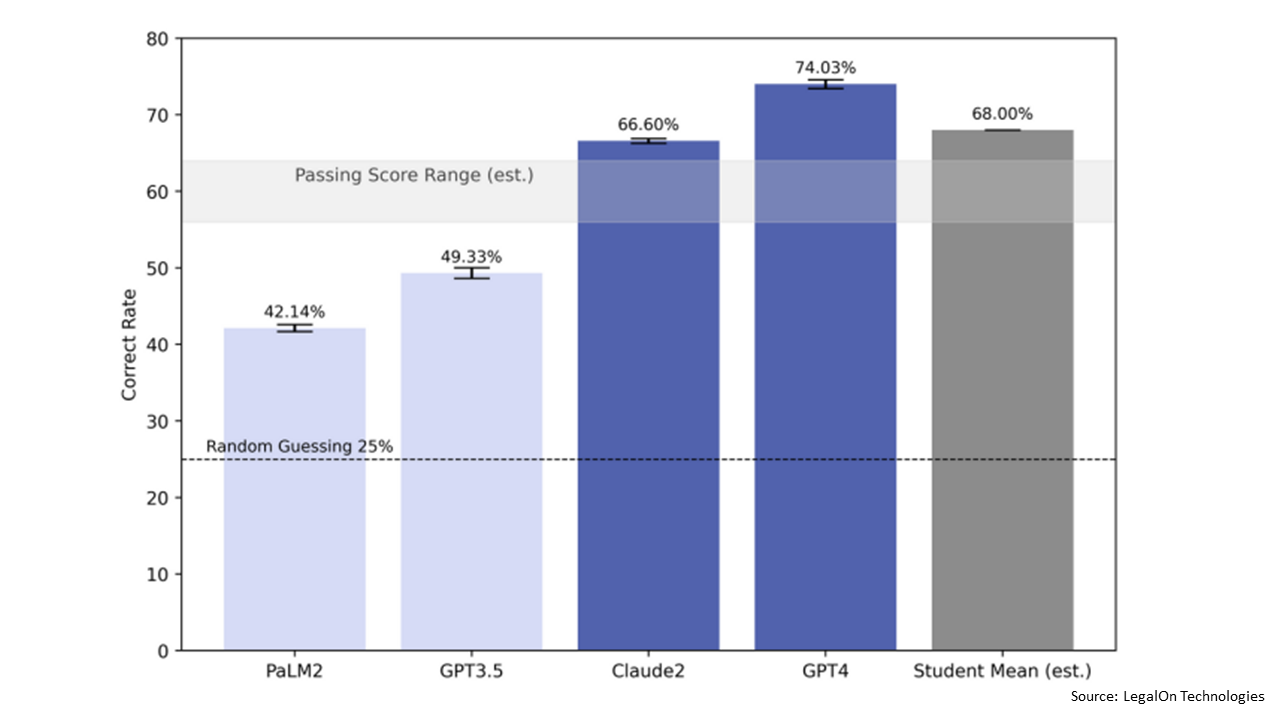

The researchers tested OpenAI’s GPT-4 and GPT-3.5, Anthropic’s Claude 2, and Google’s PaLM 2 Bison on their ability to correctly answer questions modeled on the MPRE.

GPT-4 performed best, the researchers found, answering 74% of questions correctly and outperforming the average human test-taker by an estimated 6%. Claude 2 answered 67% correctly. GPT-3.5 answered 49% correctly and PaLM 2 answered 42% correctly.

Both GPT-4 and Claude 2 scored above the approximate passing threshold for the MPRE in every state where it is required, a threshold estimated to range from 56% to 64% depending on the jurisdiction.

The LegalOn researchers tested the LLMs against 500 simulated exam questions created by Dru Stevenson, a law professor who teaches professional responsibility at South Texas College of Law Houston. He designed the questions to match the format and style of the questions on the current MPRE. Each LLM was tested using a “zero-shot” approach, meaning the models received no example questions or additional legal ethics training before answering.

While the researchers concluded that GPT-4 “exhibited remarkable proficiency,” its performance varied by subject area. It performed particularly well on questions related to conflicts of interest and client relationships, and less well on topics such as the safekeeping of funds.

“That AI can pass the legal ethics exam marks a turning point not only for legal technology but also for the practice of law,” said Stevenson. “The responsibility for ethical decisions will always remain firmly with legal professionals, but this study shows the potential for technology to assist the legal community with consistently meeting high ethical standards.”

You can download a copy of the report here.

Robert Ambrogi Blog