Artificial intelligence tools matched or exceeded human lawyers in producing reliable contract drafts in the first comprehensive benchmarking study comparing AI against legal professionals, according to research published this week.

The study, Benchmarking Humans & AI in Contract Drafting, conducted by LegalBenchmarks.ai, found that human lawyers produced reliable first drafts 56.7% of the time, while several AI products met or exceeded their performance.

The top-performing AI tool, Gemini 2.5 Pro, achieved a 73.3% reliability rate, marginally outperforming the best human lawyer at 70%.

The research evaluated 13 AI tools against human lawyers using 30 real-world contract drafting tasks. The study assessed 450 task outputs and surveyed 72 legal professionals to measure three dimensions of performance: output reliability, usefulness, and workflow integration.

Of the 13 tools evaluated, seven were designed specifically for the legal market. Six were named: August, Brackets, GC AI, InstaSpace, SimpleDocs and Wordsmith. The seventh was not identified by name, but was described as a “long-standing enterprise legal-ai platform.”

The other six were general commercial tools: ChatGPT (GPT-4.1 and GPT-5), Claude (Opus-4.1), Copilot (free version), Gemini (2.5 Pro), Le Chat (Mistral), and Qwen (qwen3-235b-a22b).

AI Identifies Legal Risks Lawyers Missed

In scenarios involving high legal risk, specialized legal AI tools outperformed general-purpose tools, raising explicit risk warnings in 83% of outputs, compared to 55% for general tools. In the same scenarios, human lawyers raised no such warnings, according to the study.

“Legal AI tools surfaced material risks that lawyers missed entirely,” the researchers wrote. In one example involving a potentially unenforceable penalty clause under New York law, AI tools flagged enforceability concerns while human lawyers provided no risk assessment.

The findings challenge assumptions about AI’s inability to exercise legal judgment, the researchers said, showing that some AI tools can identify compliance and enforceability issues overlooked by experienced practitioners.

Tools Vary In Performance

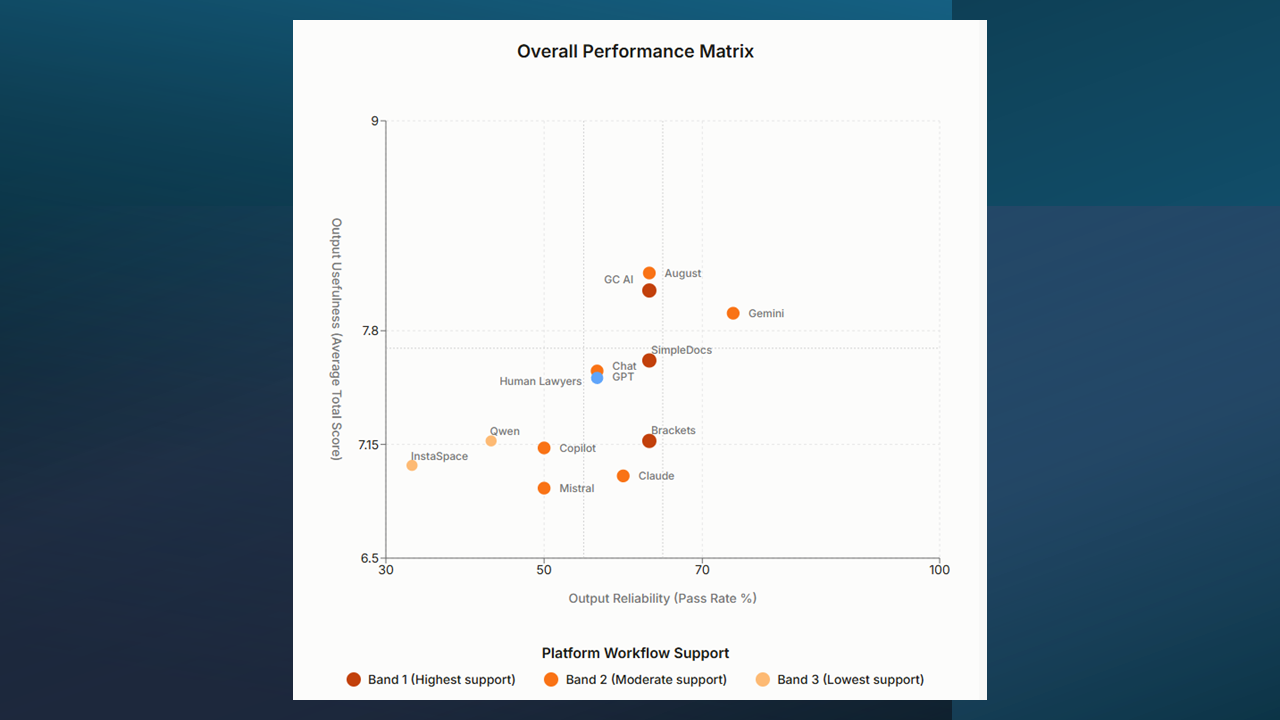

The study revealed substantial variations in AI performance. Reliability rates ranged from 44% to 73.3% across different tools. Google’s Gemini 2.5 Pro achieved the highest reliability score, followed by OpenAI’s GPT-5 at approximately 73%.

Legal AI platforms, including GC AI, Brackets, August, and SimpleDocs, also scored above the overall AI average of 57%. General-purpose AI tools slightly outperformed specialized legal AI platforms on reliability metrics, contrary to what many in the industry might expect.

In usefulness ratings, August led with an average score of 8.13 out of 9 points, while human lawyers averaged 7.53 points. The metric measured clarity, helpfulness and appropriate length of draft outputs.

“Specialized legal AI tools did not meaningfully outperform general-purpose AI tools in both output reliability and usefulness,” the researchers said. “General-purpose AI solutions had a slight edge in output reliability, while legal AI solutions scored marginally higher on output usefulness.”

Workflow Integration As Differentiator

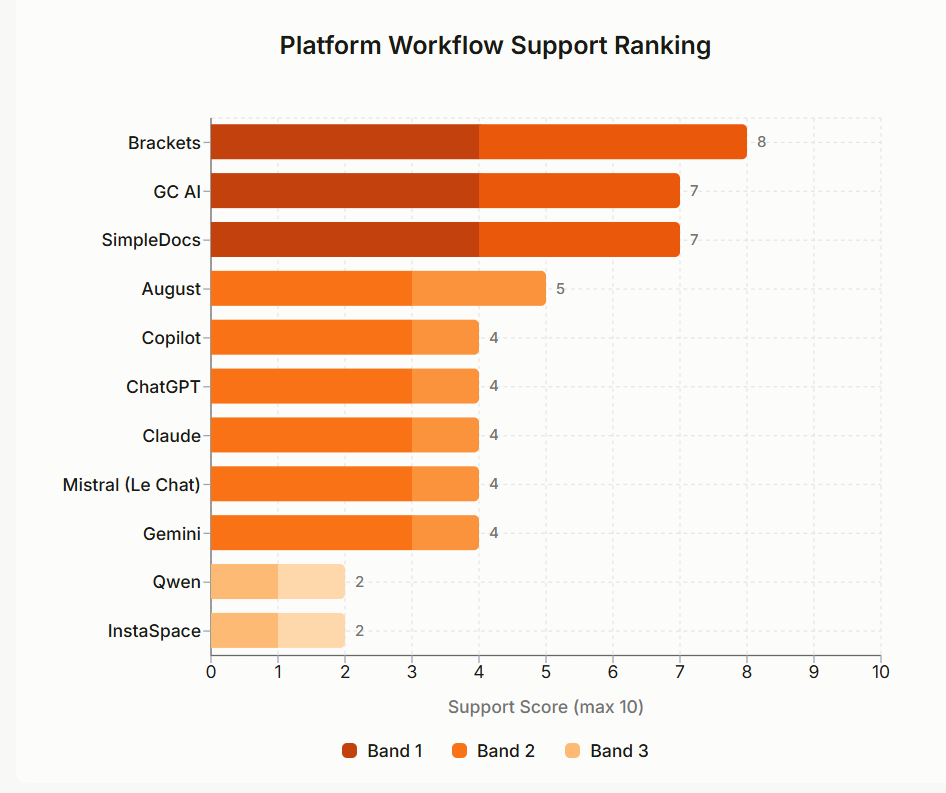

While general-purpose AI tools competed effectively on output quality, specialized legal AI platforms differentiated themselves through workflow integration. Two-thirds of tested legal AI products integrate with Microsoft Word, where most contract drafting occurs.

Brackets, GC AI, and SimpleDocs scored highest on platform workflow support, offering features like template libraries, clause storage, and quality assurance tools designed specifically for legal work.

“Platform Workflow Support is the key differentiator for specialized tools, not output performance,” the researchers concluded.

Humans Better At Complex Tasks

Human lawyers demonstrated clear advantages in tasks requiring commercial judgment and context management. They excelled at interpreting client intent, avoiding unnecessary concessions to counterparties, and integrating multiple information sources.

In one multi-source drafting task requiring integration of templates, term sheets and email communications, only the human lawyer successfully extracted complete party information from a screenshot. All AI outputs contained incomplete or inaccurate company details.

However, AI tools proved more consistent in routine drafting. In a task requiring a 10% penalty clause, all AI tools correctly reproduced the figure while one human lawyer mistakenly wrote “9%.”

The starkest difference between humans and AI was the time needed to complete a task, the report said. Humans took nearly 13 minutes per task, while AI tools produced outputs in seconds.

Changing Professional Priorities

Among the 72 lawyers surveyed for the study who use AI for legal work, 86% employ multiple tools rather than relying on a single product. Only 6% require 100% accuracy before using AI tools, while 55% expressed comfort with accuracy below 90%.

When asked about factors that would increase AI usage, 35% cited easier output verification as most important, 23% pointed to improved context management, and 21% ranked accuracy gains as the top priority.

“Accuracy is only one of several factors we consider,” one Fortune 500 general counsel told researchers. “Every lawyer is responsible for reviewing the work product they produce, with or without AI.”

Methodology and Limitations

The study evaluated AI tools and human lawyers using identical tasks contributed by practicing attorneys across various industries. Tasks ranged from basic clause drafting to complex commercial arrangements.

The research assessed three performance dimensions: output reliability (factual accuracy and legal adequacy), output usefulness (clarity and helpfulness), and platform workflow support (integration and verification features).

The human baseline consisted of in-house commercial lawyers with an average of 10 years’ experience. Outputs were evaluated through a combination of automated scoring against predefined criteria and expert reviewer assessment.

The researchers acknowledged several limitations, including the snapshot nature of rapidly evolving AI capabilities, the subjective elements in scoring usefulness, and the focus on junior- to mid-level drafting complexity.

Bottom Line

The bottom line: routine, low-risk contract drafting may be the best candidate for AI, while complex commercial negotiations still require human expertise.

“The future of drafting will not be decided by one side or one tool,” the researchers wrote. “It will be shaped by orchestration: combining the speed and consistency of general AI, the workflow fit of legal AI, and the judgment of lawyers. The real advantage will belong to teams that learn to design and manage this collaboration.”

The research was conducted by Anna Guo, Arthur Souza Rodrigues, Mohamed Al Mamari, Sakshi Udeshi and Marc Astbury, with advisory support from legal technology experts and platform evaluation by HumanSignal.

Robert Ambrogi Blog